Run VeloFill with Ollama and Gemma 3

Spin up Gemma 3 through Ollama, point VeloFill at your localhost endpoint, and keep sensitive data inside your network.

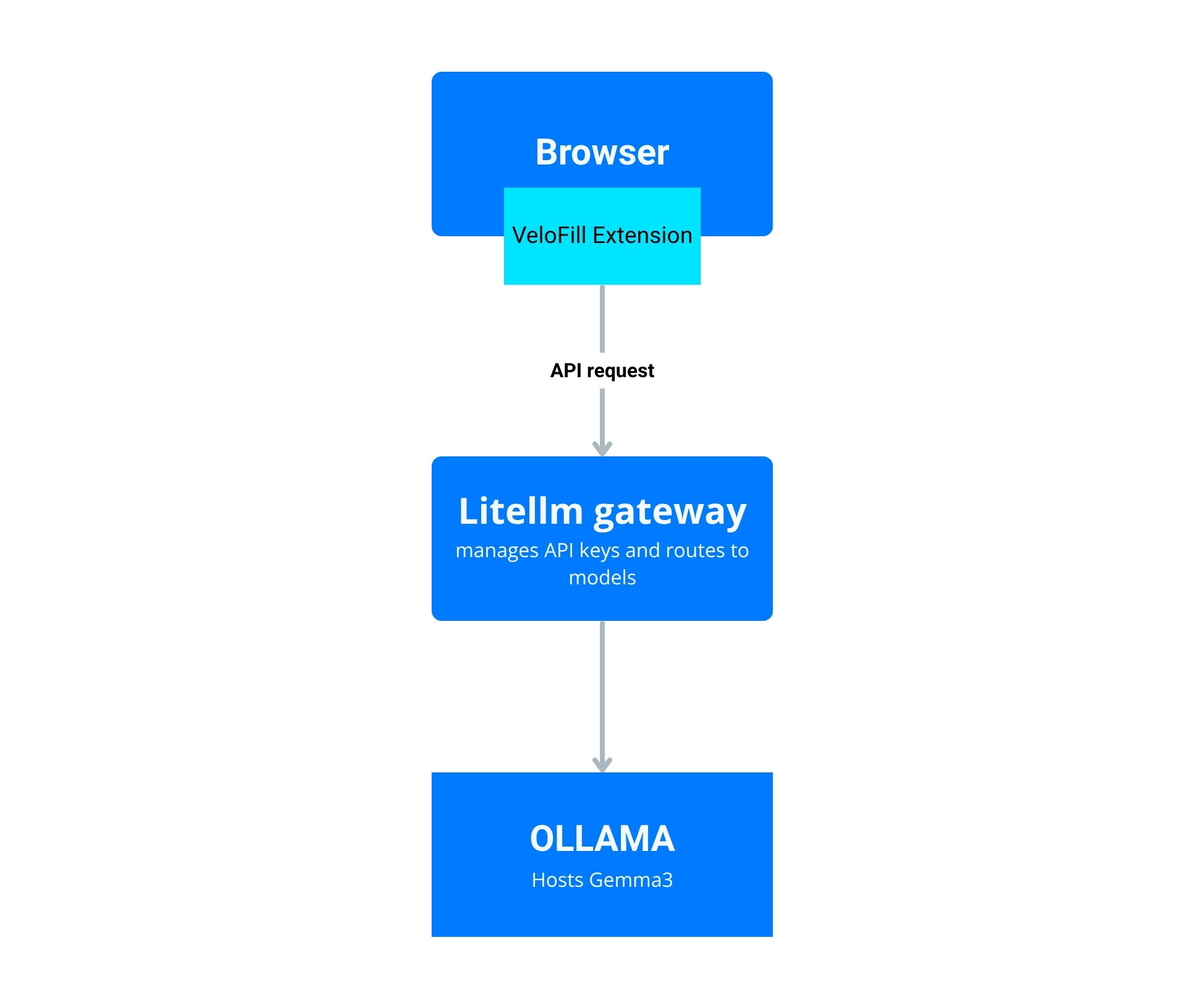

Looking for more advanced features? This guide covers the simplest way to connect VeloFill to a local model. If you need to manage multiple models, enforce stricter security, or centralize logging, see our guide on routing VeloFill through LiteLLM.

Why pair VeloFill with Gemma 3

Running Gemma 3 locally gives you fast responses and keeps regulated data off public clouds. VeloFill speaks the same OpenAI-compatible API as Ollama, so with a few tweaks you can level up your form automation without sending prompts over the internet.

Prerequisites

- At least 16 GB of system RAM, with 32 GB recommended. If you run the 9B model on a GPU, plan for at least 9 GB of VRAM.

- Ollama installed and running on macOS, Windows, or Linux.

- VeloFill extension installed in Chrome, Edge, or Firefox.

- Optional but encouraged: GPU acceleration for faster inference times.

Step 1: pull Gemma 3 through Ollama

- Open a terminal on the machine hosting Ollama.

- Ensure the Ollama application is running. If it was installed as a service, it should already be active. If not, you can start it manually with `ollama serve`.

- Download the Gemma 3 model: `ollama pull gemma3:9b`

- (Optional) Test the model locally to confirm it responds: `ollama run gemma3:9b "Summarize VeloFill in two sentences."`

Tip: If you have limited GPU memory, try `gemma3:4b`. The instructions below work the same way; just swap the tag where needed.
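
Before moving on, it can help to confirm that the tag you pulled is actually in the local model store. The sketch below assumes `ollama list` prints a header row followed by one model per line, with the tag in the first column. `has_model` is a hypothetical helper name, not part of Ollama.

```shell
#!/usr/bin/env bash
# has_model TAG
# Reads `ollama list`-style output on stdin and succeeds (exit 0) only if
# TAG appears in the first column of a non-header row.
# Assumption: row 1 is a header and the model tag is the first field.
has_model() {
  awk -v tag="$1" 'NR > 1 && $1 == tag { found = 1 } END { exit !found }'
}
```

With Ollama running, `ollama list | has_model gemma3:9b && echo "ready"` prints `ready` only when that tag is present. Substitute whichever tag you pulled.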

Step 2: expose the Ollama API

Ollama listens on http://127.0.0.1:11434 by default, meaning it only accepts connections from the same machine. If VeloFill is running on the same machine as Ollama, you do not need to change anything.

Warning: The Ollama API has no built-in authentication. Do not expose the Ollama port directly to the public internet, as this would allow anyone to use your model.

If you need to access Ollama from a different workstation, use a secure method like an SSH tunnel or a reverse proxy that can add an authentication layer.
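
One way to set up the SSH tunnel mentioned above is a local port forward. In this sketch, `user@ollama-host` is a placeholder for your own login and host, and `forward_spec` and `open_tunnel` are hypothetical helper names. It assumes the remote machine runs an SSH server and that Ollama listens there on its default loopback port.

```shell
#!/usr/bin/env bash
# Port used on both ends of the tunnel (Ollama's default unless overridden).
OLLAMA_PORT="${OLLAMA_PORT:-11434}"

# forward_spec
# Prints the value for ssh's -L option: local port -> loopback port on the remote host.
forward_spec() {
  printf '%s:127.0.0.1:%s\n' "$OLLAMA_PORT" "$OLLAMA_PORT"
}

# open_tunnel USER@HOST
# Holds the tunnel open in the foreground without running a remote command (-N).
# Press Ctrl+C to close it.
open_tunnel() {
  ssh -N -L "$(forward_spec)" "$1"
}
```

While `open_tunnel user@ollama-host` is running on the workstation, requests to `http://127.0.0.1:11434` on that workstation are carried over SSH to the Ollama host, so Ollama itself can keep listening on loopback only.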

Step 3: point VeloFill at Gemma 3

- Open the VeloFill extension and choose Options → LLM Provider.

- Select OpenAI-compatible / Custom endpoint.

- Set the endpoint URL to `http://127.0.0.1:11434/v1`.

- Leave the API key field blank unless you put Ollama behind a proxy that enforces keys.

- Under Model ID, enter `gemma3:9b` (or the tag you pulled earlier).

- Save the configuration.

Optional: adjust model parameters

These parameters can be adjusted directly within the VeloFill extension’s LLM Provider options screen.

- Max tokens: Gemma 3 9B handles up to 8K tokens comfortably; cap requests to avoid latency spikes.

- Temperature: Start at `0.2` for deterministic autofill responses; increase gradually for more creative copy.

- System prompt: Reinforce internal style guides or classification instructions to keep outputs on-brand.
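
For reference, the three settings above correspond to standard fields in an OpenAI-compatible chat request: `max_tokens`, `temperature`, and a message with the `system` role. The sketch below builds such a body by hand. How VeloFill assembles its own requests is not documented here, so treat the mapping as an assumption. `chat_body` and `ask` are hypothetical helpers, the `512` cap is only illustrative, and the prompts must not contain double quotes or backslashes because no JSON escaping is done.

```shell
#!/usr/bin/env bash
BASE_URL="${BASE_URL:-http://127.0.0.1:11434/v1}"   # endpoint URL from Step 3
MODEL="${MODEL:-gemma3:9b}"                          # tag used in this guide
TEMPERATURE="${TEMPERATURE:-0.2}"                    # starting value suggested above
MAX_TOKENS="${MAX_TOKENS:-512}"                      # illustrative cap (assumption)

# chat_body SYSTEM_PROMPT USER_PROMPT
# Prints a chat-completions JSON body. No JSON escaping is performed.
chat_body() {
  printf '{"model":"%s","temperature":%s,"max_tokens":%s,"messages":[{"role":"system","content":"%s"},{"role":"user","content":"%s"}]}\n' \
    "$MODEL" "$TEMPERATURE" "$MAX_TOKENS" "$1" "$2"
}

# ask SYSTEM_PROMPT USER_PROMPT
# Sends the body to the local endpoint and prints the raw JSON response.
ask() {
  curl -sS "$BASE_URL/chat/completions" \
    -H "Content-Type: application/json" \
    -d "$(chat_body "$1" "$2")"
}
```

Running `ask "Answer in one short sentence." "What is a postal code?"` with different `TEMPERATURE` or `MAX_TOKENS` values is a quick way to see how each setting affects latency and output before changing it in the extension.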

Step 4: validate the setup inside VeloFill

- Open a low-risk form (newsletter signup, sandbox CRM, etc.).

- Trigger the VeloFill autofill workflow.

- Watch the status panel—responses should show Gemma 3 as the active model, returning in a couple of seconds.

- If nothing happens, open the browser devtools and look in the Network tab for calls to `/v1/chat/completions`. A `200` response confirms the integration is live.
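
The same check can be run outside the browser. This sketch posts a one-message request to the endpoint from Step 3 and prints only the HTTP status code. `probe_status` and `explain_status` are hypothetical helper names, and the model tag is the one used throughout this guide.

```shell
#!/usr/bin/env bash
BASE_URL="${BASE_URL:-http://127.0.0.1:11434/v1}"
MODEL="${MODEL:-gemma3:9b}"

# probe_status
# POSTs a tiny chat request and prints only the HTTP status code.
# curl prints 000 when it received no HTTP response at all.
probe_status() {
  curl -s -o /dev/null -w '%{http_code}' "$BASE_URL/chat/completions" \
    -H "Content-Type: application/json" \
    -d "{\"model\":\"$MODEL\",\"messages\":[{\"role\":\"user\",\"content\":\"ping\"}]}"
}

# explain_status CODE
# Turns a status code into a short, human-readable verdict.
explain_status() {
  case "$1" in
    200) echo "live" ;;
    000) echo "no HTTP response (is Ollama running?)" ;;
    *)   echo "unexpected status $1" ;;
  esac
}
```

`explain_status "$(probe_status)"` prints `live` when the endpoint answers with `200`. If it does and VeloFill still fails, the problem is more likely in the extension settings than in Ollama.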

Troubleshooting checklist

- Connection refused: Ensure `ollama serve` is running and that firewalls allow port 11434.

- Model download slow: Use `ollama pull gemma3:4b` on lower-bandwidth connections and upgrade later.

- Latency high: Reduce prompt size, lower max tokens, or move the model to a machine with a stronger GPU.

- Prompt privacy: Keep the Ollama host on your internal network. Avoid port forwarding directly to the public internet without authentication.
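
For the connection-related items in this checklist, curl's exit code often narrows down the cause faster than the browser does. The sketch below maps a few documented curl exit codes to the checks above. `OLLAMA_URL`, `explain_curl_exit`, and `check_ollama` are hypothetical names chosen for this example.

```shell
#!/usr/bin/env bash
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# explain_curl_exit CODE
# Maps a few common curl exit codes to a troubleshooting hint.
explain_curl_exit() {
  case "$1" in
    0)  echo "reachable" ;;
    6)  echo "could not resolve host: check the hostname" ;;
    7)  echo "could not connect: is 'ollama serve' running and port 11434 allowed?" ;;
    28) echo "timed out: check firewalls and the network path" ;;
    *)  echo "curl exit code $1: see the curl manual" ;;
  esac
}

# check_ollama
# Requests the server root with a 5-second cap and explains the result.
check_ollama() {
  curl -s -o /dev/null --max-time 5 "$OLLAMA_URL/"
  explain_curl_exit "$?"
}
```

Run `check_ollama` on the machine where VeloFill is installed, since that is the network path the extension uses.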

Keep iterating

- Layer VeloFill knowledge bases so Gemma 3 can reference your private SOPs.

- Schedule periodic model refreshes when new Gemma 3 builds release through Ollama.

- Pair with LiteLLM if you want a unified gateway for multiple local models.

Need a guided walkthrough?

Our team can help you connect VeloFill to your workflows, secure API keys, and roll out best practices.